McAfee has unveiled an “industry-leading AI model” that can detect AI-generated audio stitched into videos.

The model, dubbed Project Mockingbird, is designed to help combat AI voice scams and misinformation.

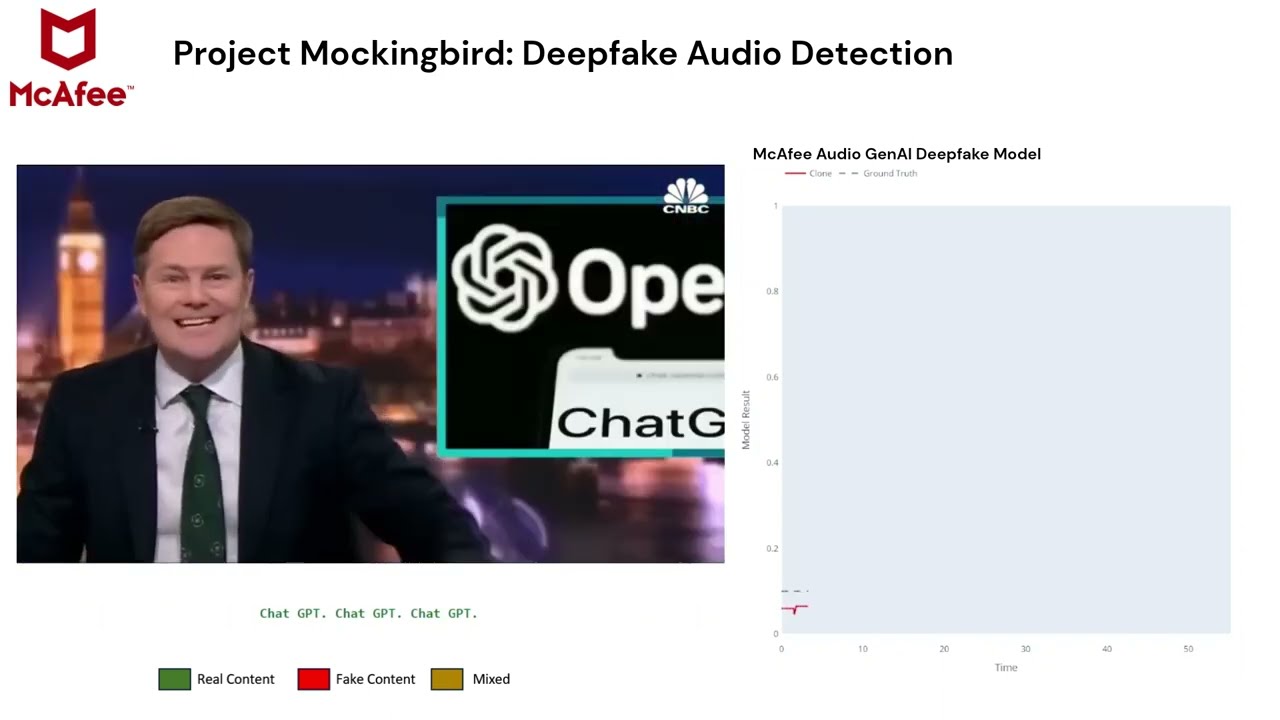

“Project Mockingbird detects whether the audio is truly the human person or not, based on listening to the words that are spoken. It’s a way to combat the concerning trend of using generative AI to create convincing deepfakes,” Steve Grobman, the CTO of McAfee, told VentureBeat in an interview.

“We build advanced AI that is able to identify micro characteristics that might be even imperceptible to humans,” he added.

McAfee plans to integrate the tech into its products. However, the company has not provided a timeline for its release.

Over 90% Accuracy Rate in Detecting Altered Audio

Project Mockingbird, unveiled at the ongoing CES (Consumer Electronics Show) 2024, was “taught” to determine whether videos or audio files have been manipulated using AI technology. Grobman said it uses “a blend of AI-powered contextual, behavioral, and categorical detection models” to identify deepfake audio and has an accuracy rate of over 90%.

“The use cases for this AI detection technology are far-ranging and will prove invaluable to consumers amidst a rise in AI-generated scams and misinformation,” Grobman said.

“We’ll help consumers avoid ‘cheapfake’ scams where a cloned celebrity is claiming a new limited-time giveaway, and also make sure consumers know instantaneously when watching a video about a presidential candidate, whether it’s real or AI-generated for malicious purposes,” he added.

AI-generated videos are widespread on popular social media platforms such as Facebook, Instagram, and Threads. According to Grobman, cybercriminals hijack legitimate accounts to spread deepfake videos.

In December 2023, McAfee predicted that AI-generated deepfakes would be used to spread misinformation ahead of the 2024 U.S. Presidential Election. With major elections also taking place in India and the European Union this year, AI-generated audio and video could threaten the integrity of democratic processes.

McAfee also predicted that scammers will increasingly use deepfakes to defraud victims. For example, cybercriminals could leverage the images and names of popular figures to endorse and promote fake online marketplaces and other fraudulent schemes.

How to Protect Yourself From Deepfake Scams

It’s becoming increasingly difficult to tell AI-generated content apart from genuine content. Projects like Mockingbird and Google’s SynthID aim to solve this problem.

To protect yourself from the escalating onslaught of deepfakes, McAfee recommends that you:

- Exercise caution when sharing personal information and images online. Do not give scammers and criminals material to work with.

- Understand what deepfakes are. Learn about the signs of deepfakes, such as contextual clues (odd grammar, missing information), implausible content, and visual distortions in images or videos.

- Set social media accounts to private and be selective about who follows you.

- Set up alerts for your name to monitor unauthorized use of your likeness, and use identity monitoring software.

- Report any deepfakes you encounter.

- Stay ahead of sophisticated cyber threats with AI-driven security solutions.

In the video below, McAfee shows Project Mockingbird discerning between real, fake, and mixed media.
